Human Motion Synthesis with Diffusion Models in Real Environments

Abstract

This was a group project for the Digital Humans class at ETH. We extended Diffusion Noise Optimization (DNO) to generate realistic human motions in real-world scenes containing multiple complex-shaped obstacles, integrating DNO's diffusion-based generation with realistic environments.

My Contributions

- All the work to get the model initially running and training against a Signed Distance Function (SDF) representing an environment

- Integrating real-world scans

- Creating an automated pipeline, enabling bulk running of different environments and configurations

- Implementing evaluation metrics

- The ablation study
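
To give a rough idea of what optimizing against an SDF involves, here is a minimal sketch of a collision penalty. It is not the project code (which is private); the function names, the analytic sphere obstacle, and the `margin` value are all assumptions made for illustration. The idea is that an SDF returns a negative distance inside an obstacle and a positive one outside, so any joint closer than a safety margin can be penalized.

```python
import numpy as np


def sphere_sdf(points, center, radius):
    """Signed distance to a sphere: negative inside, positive outside."""
    return np.linalg.norm(points - center, axis=-1) - radius


def collision_penalty(joints, sdf, margin=0.05):
    """Mean hinge penalty over all frames and joints.

    joints: array of shape (frames, joints, 3), in metres.
    sdf:    callable mapping (..., 3) points to (...) signed distances.
    margin: hypothetical safety distance; joints closer than this are penalized.
    """
    distances = sdf(joints)
    # Zero when a joint is at least `margin` away from the obstacle surface,
    # growing linearly as the joint approaches or penetrates it.
    return float(np.mean(np.maximum(margin - distances, 0.0)))


# Toy obstacle: a unit sphere at the origin (stand-in for a scanned scene).
def obstacle(points):
    return sphere_sdf(points, center=np.zeros(3), radius=1.0)


far_motion = np.full((4, 22, 3), 5.0)   # every joint well outside the sphere
inside_motion = np.zeros((4, 22, 3))    # every joint at the sphere's centre

print(collision_penalty(far_motion, obstacle))
print(collision_penalty(inside_motion, obstacle))
```

In a real pipeline the SDF would come from the scanned scene rather than an analytic shape, and the penalty would need to be differentiable in whatever framework drives the optimization.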

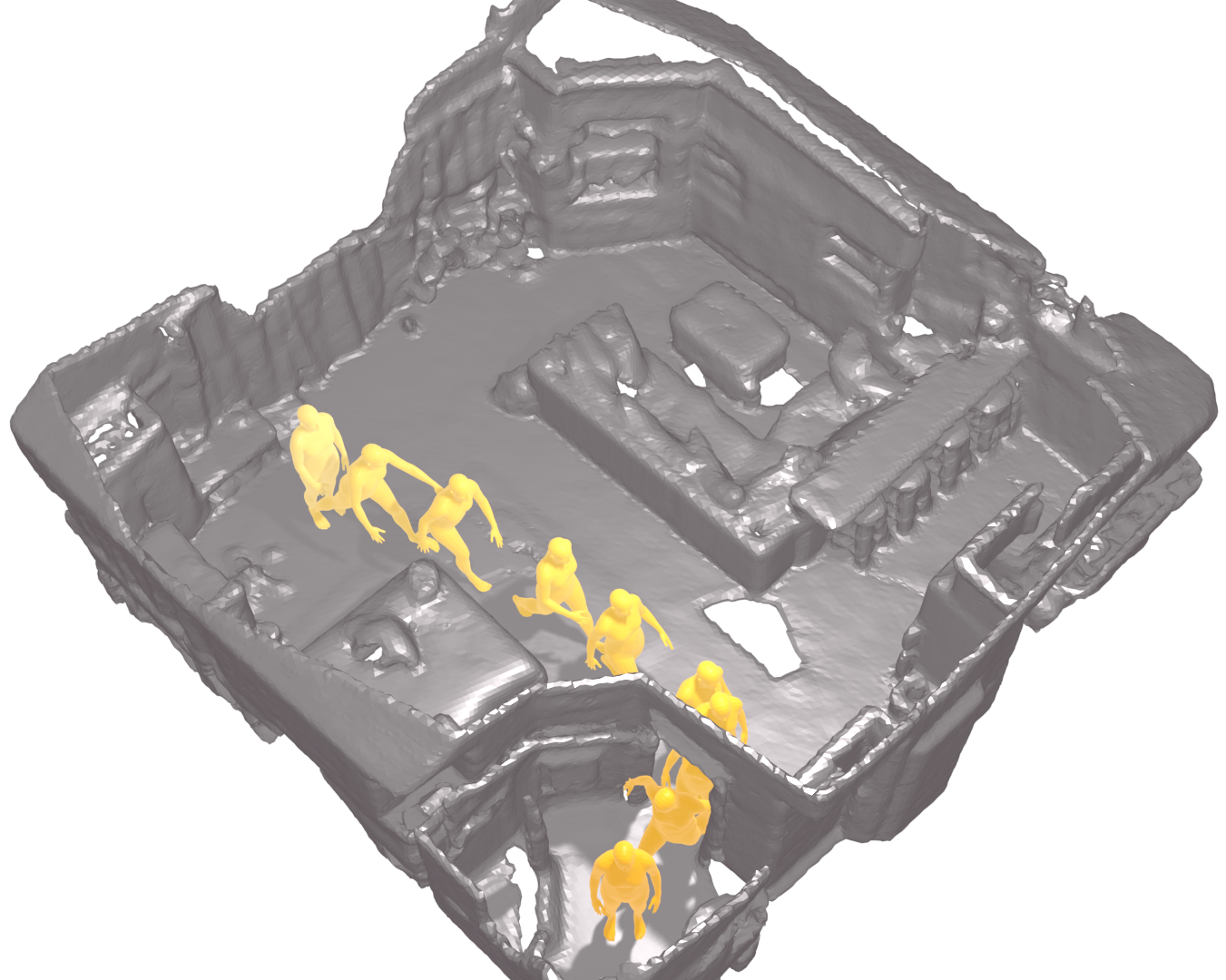

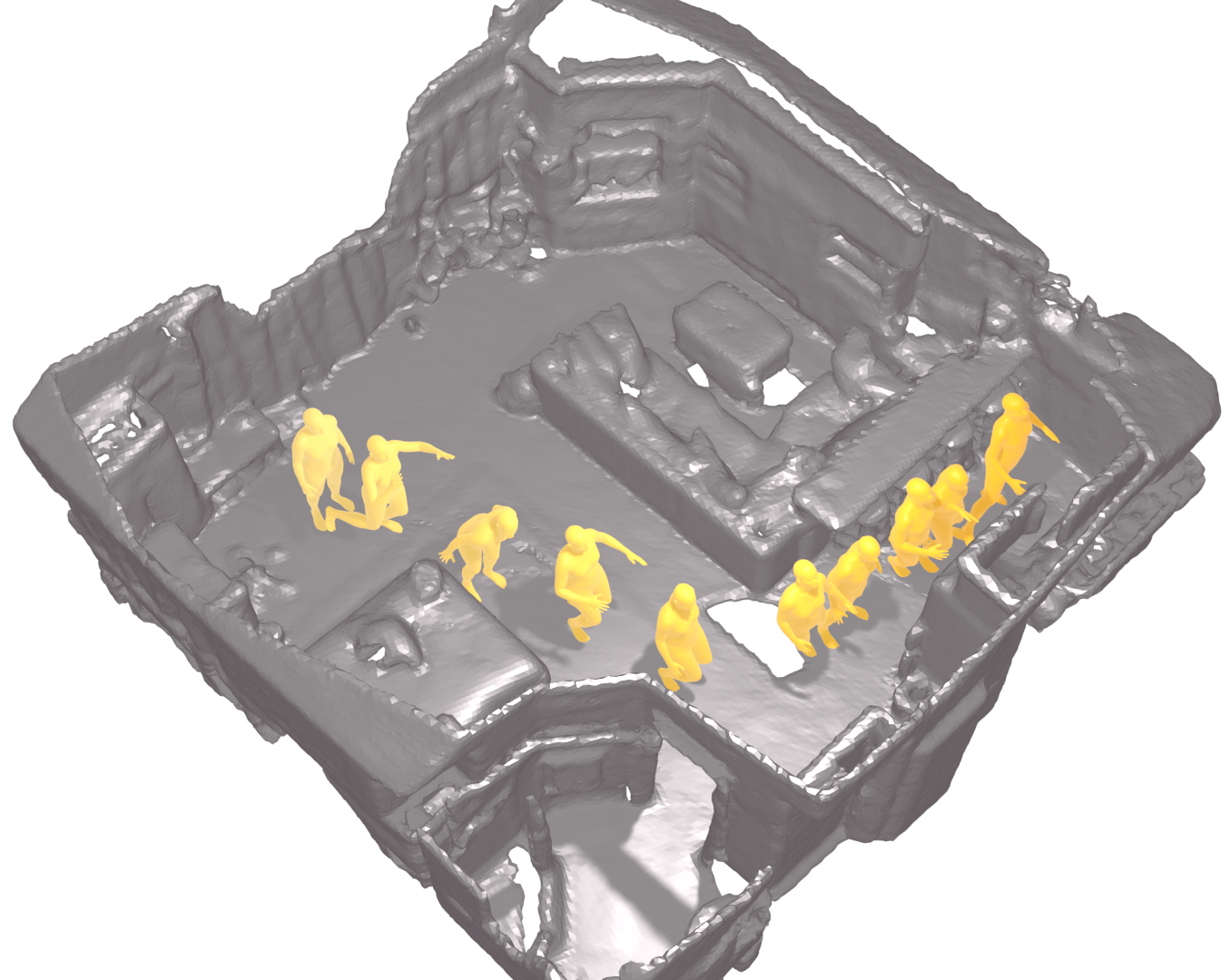

Results

- Implemented three different SDFs and tested on multiple scenes

- Reached the goal 94% of the time, with a 20% increase in foot skating ratio compared to DNO

- Saw an average 20% loss in semantic accuracy preservation compared to DNO

- This is expected, as the obstacles were more complicated than in DNO, and there’s a tradeoff between preserving accuracy and avoiding obstacles

- Accurately preserved semantic content for all the tested behaviors: Walking, Crawling, Jumping, and Walking with Raised Hands
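
For readers unfamiliar with the foot skating ratio, the sketch below shows one common way to compute it: the fraction of frame-to-frame transitions in which a foot stays near the ground yet slides horizontally. This is an illustration, not the evaluation code used in the project; the thresholds (5 cm height, 2.5 cm slide), the up axis, and the function name are assumptions.

```python
import numpy as np


def foot_skating_ratio(foot_pos, height_thresh=0.05, slide_thresh=0.025, up_axis=1):
    """Fraction of transitions where any foot slides while in ground contact.

    foot_pos: array of shape (frames, feet, 3), in metres.
    The thresholds and up axis are assumed values for illustration.
    """
    heights = foot_pos[..., up_axis]                      # (frames, feet)
    horizontal = np.delete(foot_pos, up_axis, axis=-1)    # (frames, feet, 2)

    # Horizontal displacement between consecutive frames.
    slide = np.linalg.norm(horizontal[1:] - horizontal[:-1], axis=-1)

    # A foot counts as in contact if it is near the ground in both frames.
    in_contact = (heights[1:] < height_thresh) & (heights[:-1] < height_thresh)

    skating = in_contact & (slide > slide_thresh)         # (frames - 1, feet)
    return float(np.mean(np.any(skating, axis=-1)))


# Two feet over five frames: planted, sliding on the ground, and sliding in the air.
planted = np.zeros((5, 2, 3))

sliding = np.zeros((5, 2, 3))
sliding[:, 0, 0] = np.arange(5) * 0.1   # left foot moves 10 cm per frame along x

airborne = sliding.copy()
airborne[:, :, 1] = 0.5                 # both feet lifted 50 cm off the ground

print(foot_skating_ratio(planted))
print(foot_skating_ratio(sliding))
print(foot_skating_ratio(airborne))
```

A lower ratio is better: a planted foot or a foot moving through the air does not count, only one that moves while it should be in contact with the ground.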

Example Results

*Note: The code is private because we were given early code access and couldn’t fork the actual repo. I don’t want to publish it unforked and risk causing confusion or failing to give proper credit. The now-public original DNO code can be found here. I am happy to give access to my repo if you would like to evaluate my personal work.